Intro

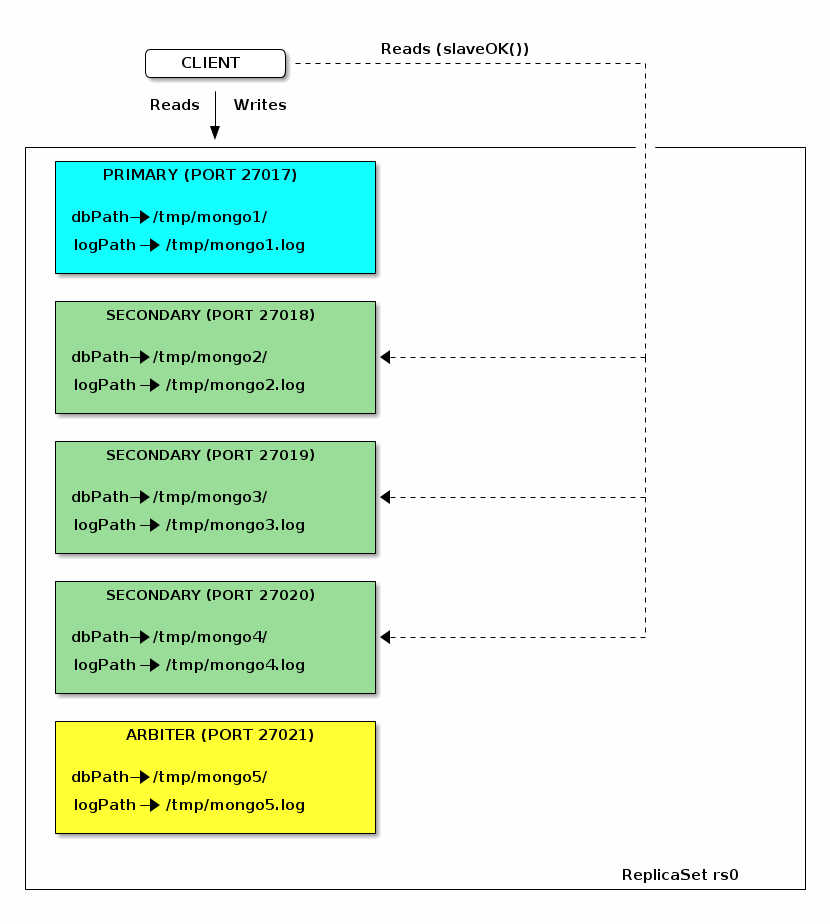

For this lab you will use the MongoDB binaries you downloaded for the previous lab. We will set up a replica set of 4 nodes plus an arbiter, then add sharding and follow how the data is rebalanced across the replica sets.

Deploying a replica set

We will deploy a replica set of 4 members running on different ports. To avoid ties when electing a new primary, we will add an arbiter.
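The arithmetic behind the arbiter can be sketched in JavaScript, the language of the mongo shell. This is a minimal sketch that assumes every member keeps the default votes: 1; the function names are illustrative and are not mongo shell helpers.

```javascript
// Election arithmetic for a replica set, assuming every member has votes: 1.
// Function names are illustrative, not mongo shell helpers.

// A candidate needs strictly more than half of the voting members.
function majority(voters) {
  return Math.floor(voters / 2) + 1;
}

// How many voting members can be unreachable while a primary can still be elected.
function tolerableFailures(voters) {
  return voters - majority(voters);
}

// With an even number of voters, a network partition can leave exactly half
// of them on each side: neither side reaches a majority, so no primary is elected.
function evenSplitPossible(voters) {
  return voters % 2 === 0;
}
```

For the 4 data-bearing members alone, majority(4) is 3 and tolerableFailures(4) is 1, and a 2/2 split is possible. With the arbiter there are 5 voters: majority(5) is still 3, tolerableFailures(5) rises to 2 and evenSplitPossible(5) is false.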

The target architecture is as follows:

Starting a mongod instance for each replica set member

1) Start four mongod instances that belong to the same replica set (--replSet rs0), listen on different ports (27017, 27018, 27019, 27020) and run in the background (--fork), each with its own dbPath and logpath (see the mongod documentation). For example, use /tmp/mongo1 as the data directory of the first mongod and /tmp/mongo1.log as its logpath.

mkdir /tmp/mongo1/ ; ./mongod --port 27017 --replSet rs0 --fork --logpath /tmp/mongo1.log --dbpath /tmp/mongo1

mkdir /tmp/mongo2/ ; ./mongod --port 27018 --replSet rs0 --fork --logpath /tmp/mongo2.log --dbpath /tmp/mongo2

mkdir /tmp/mongo3/ ; ./mongod --port 27019 --replSet rs0 --fork --logpath /tmp/mongo3.log --dbpath /tmp/mongo3

mkdir /tmp/mongo4/ ; ./mongod --port 27020 --replSet rs0 --fork --logpath /tmp/mongo4.log --dbpath /tmp/mongo4

Initializing the replica set

2) Start a mongo shell connected to the mongod instance running on port 27017 (localhost:27017).

mongo --port 27017

MongoDB shell version: 3.2.12

connecting to: 127.0.0.1:27017/test

Server has startup warnings:

2017-12-03T13:59:38.792+0100 I CONTROL [initandlisten]

2017-12-03T13:59:38.792+0100 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-12-03T13:59:38.792+0100 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-12-03T13:59:38.792+0100 I CONTROL [initandlisten]

>

3) In the mongo shell, build a JSON document describing the replica set configuration:

rsconf = {

_id: "rs0",

members: [

{

_id: 0,

host: "localhost:27017"

}

]

}

4) Then apply this configuration with rs.initiate(rsconf). Which mongod instance is the PRIMARY of the replica set? (You can use rs.status() or look for this information in the logs.)

> rs.initiate( rsconf )

{ "ok" : 1 }
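Besides reading the log, the answer can be extracted from the document returned by rs.status(), whose members array gives each member's name and stateStr (PRIMARY, SECONDARY, ARBITER, ...). A minimal sketch that relies only on those two fields; the helper names are hypothetical. (db.isMaster().primary also reports the current primary.)

```javascript
// Group replica set members by state, using the "members", "name" and
// "stateStr" fields of the document returned by rs.status().
function membersByState(status) {
  var byState = {};
  status.members.forEach(function (member) {
    if (!byState[member.stateStr]) {
      byState[member.stateStr] = [];
    }
    byState[member.stateStr].push(member.name);
  });
  return byState;
}

// Return the name of the primary, or null if there is none (e.g. during an election).
function primaryOf(status) {
  var primaries = membersByState(status).PRIMARY || [];
  return primaries.length === 1 ? primaries[0] : null;
}
```

In the mongo shell this is called as primaryOf(rs.status()). Right after rs.initiate() it may return null for a moment while the election completes, as the rs0:SECONDARY prompt below suggests.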

rs0:SECONDARY>

[aar@wifibridge bin]$ tail -f /tmp/mongo1.log

2017-12-03T15:06:56.562+0100 I REPL [conn1] replSetInitiate admin command received from client

2017-12-03T15:06:56.568+0100 I REPL [conn1] replSetInitiate config object with 1 members parses ok

2017-12-03T15:06:56.569+0100 I REPL [conn1] ******

2017-12-03T15:06:56.569+0100 I REPL [conn1] creating replication oplog of size: 990MB...

2017-12-03T15:06:56.574+0100 I STORAGE [conn1] Starting WiredTigerRecordStoreThread local.oplog.rs

2017-12-03T15:06:56.574+0100 I STORAGE [conn1] The size storer reports that the oplog contains 0 records totaling to 0 bytes

2017-12-03T15:06:56.574+0100 I STORAGE [conn1] Scanning the oplog to determine where to place markers for truncation

2017-12-03T15:06:56.577+0100 I REPL [conn1] ******

2017-12-03T15:06:56.590+0100 I INDEX [conn1] build index on: admin.system.version properties: { v: 2, key: { version: 1 }, name: "incompatible_with_version_32", ns: "admin.system.version" }

2017-12-03T15:06:56.590+0100 I INDEX [conn1] building index using bulk method; build may temporarily use up to 500 megabytes of RAM

2017-12-03T15:06:56.591+0100 I INDEX [conn1] build index done. scanned 0 total records. 0 secs

2017-12-03T15:06:56.592+0100 I COMMAND [conn1] setting featureCompatibilityVersion to 3.4

2017-12-03T15:06:56.592+0100 I REPL [conn1] New replica set config in use: { _id: "rs0", version: 1, protocolVersion: 1, members: [ { _id: 0, host: "localhost:27017", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 } ], settings: { chainingAllowed: true, heartbeatIntervalMillis: 2000, heartbeatTimeoutSecs: 10, electionTimeoutMillis: 10000, catchUpTimeoutMillis: 60000, getLastErrorModes: {}, getLastErrorDefaults: { w: 1, wtimeout: 0 }, replicaSetId: ObjectId('5a2405001bdd1e0fddbac66a') } }

2017-12-03T15:06:56.592+0100 I REPL [conn1] This node is localhost:27017 in the config

2017-12-03T15:06:56.592+0100 I REPL [conn1] transition to STARTUP2

2017-12-03T15:06:56.592+0100 I REPL [conn1] Starting replication storage threads

2017-12-03T15:06:56.593+0100 I REPL [conn1] Starting replication fetcher thread

2017-12-03T15:06:56.593+0100 I REPL [conn1] Starting replication applier thread

2017-12-03T15:06:56.593+0100 I REPL [conn1] Starting replication reporter thread

2017-12-03T15:06:56.593+0100 I REPL [rsSync] transition to RECOVERING

2017-12-03T15:06:56.594+0100 I REPL [rsSync] transition to SECONDARY

2017-12-03T15:06:56.595+0100 I REPL [rsSync] conducting a dry run election to see if we could be elected

2017-12-03T15:06:56.595+0100 I REPL [ReplicationExecutor] dry election run succeeded, running for election

2017-12-03T15:06:56.598+0100 I REPL [ReplicationExecutor] election succeeded, assuming primary role in term 1

2017-12-03T15:06:56.598+0100 I REPL [ReplicationExecutor] transition to PRIMARY

2017-12-03T15:06:56.598+0100 I REPL [ReplicationExecutor] Entering primary catch-up mode.

2017-12-03T15:06:56.598+0100 I REPL [ReplicationExecutor] Exited primary catch-up mode.

2017-12-03T15:06:58.595+0100 I REPL [rsSync] transition to primary complete; database writes are now permitted

The log shows that localhost:27017, the only member so far, has been elected PRIMARY.

5) In the mongo shell, call rs.conf() to display the updated replica set configuration.

rs0:PRIMARY> rs.conf()

{

"_id" : "rs0",

"version" : 1,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "localhost:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : 60000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5a2405001bdd1e0fddbac66a")

}

}

The replica set is now initialized, but for now it contains a single member (the primary, running on port 27017). We will now add the other members.

6) Use rs.add() to add the other members to the replica set, then use rs.status() to display the state of the replica set.

rs0:PRIMARY> rs.add('localhost:27018')

{ "ok" : 1 }

rs0:PRIMARY> rs.add('localhost:27019')

{ "ok" : 1 }

rs0:PRIMARY> rs.add('localhost:27020')

{ "ok" : 1 }
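The three rs.add() calls above could also be replaced by a single configuration document passed to rs.initiate() on a fresh set of instances. A minimal sketch, assuming all members run on localhost and that the arbiter's mongod is already started; buildReplSetConfig is a hypothetical helper, not a mongo shell function.

```javascript
// Build a replica set configuration document for rs.initiate().
// Assumption: every member runs on localhost; member _ids are assigned 0, 1, 2, ...
// If arbiterPort is given, that member is added with arbiterOnly: true.
function buildReplSetConfig(setName, ports, arbiterPort) {
  var members = ports.map(function (port, index) {
    return { _id: index, host: "localhost:" + port };
  });
  if (arbiterPort !== undefined) {
    members.push({
      _id: members.length,
      host: "localhost:" + arbiterPort,
      arbiterOnly: true
    });
  }
  return { _id: setName, members: members };
}
```

For example, rs.initiate(buildReplSetConfig("rs0", [27017, 27018, 27019, 27020], 27021)) describes the final topology of steps 1 to 7 in one call. The step-by-step approach of the lab has the advantage of showing the configuration version increase with every rs.add().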

rs0:PRIMARY> rs.conf()

{

"_id" : "rs0",

"version" : 4,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "localhost:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "localhost:27018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "localhost:27019",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 3,

"host" : "localhost:27020",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : 60000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5a2405001bdd1e0fddbac66a")

}

}

Starting an arbiter

An arbiter is a mongod instance that holds no data. It is added to the replica set by setting the arbiterOnly parameter when calling rs.add().

7) Start a new mongod instance on port 27021 and add it to the replica set as an arbiter.

[aar@wifibridge bin]$ mkdir /tmp/mongo5/ ; ./mongod --port 27021 --replSet rs0 --fork --logpath /tmp/mongo5.log --dbpath /tmp/mongo5

rs0:PRIMARY> rs.add('localhost:27021',true)

{ "ok" : 1 }

rs0:PRIMARY> rs.conf()

{

"_id" : "rs0",

"version" : 5,

"protocolVersion" : NumberLong(1),

"members" : [

...

{

"_id" : 4,

"host" : "localhost:27021",

"arbiterOnly" : true,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

....

}

Consistency and replication

8) Use mongoimport to import the Enron emails.

./mongoimport --db test --collection emails --file ../../enron.json

9) Write the query that displays all the emails in the database. Run it on the primary and on a secondary with different values of the readConcern parameter.

db.emails.find( { } ).readConcern(TODO).maxTimeMS(10000)

rs0:PRIMARY> db.emails.find( { } ).readConcern("local").maxTimeMS(10000)

rs0:PRIMARY> db.emails.find( { } ).readConcern("linearizable").maxTimeMS(10000)

rs0:PRIMARY> db.emails.find( { } ).readConcern("majority").maxTimeMS(10000)

Error: error: {

"ok" : 0,

"errmsg" : "Majority read concern requested, but server was not started with --enableMajorityReadConcern.",

"code" : 148,

"codeName" : "ReadConcernMajorityNotEnabled"

}
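The error above comes from the server configuration, not from the query: in this MongoDB version, the "majority" read concern is only available when mongod is started with --enableMajorityReadConcern (WiredTiger storage engine). The shell helper .readConcern(...) maps to the readConcern field of the find command. A minimal sketch that builds that command for db.runCommand(), assuming the levels available in MongoDB 3.4 (local, majority, linearizable); buildFindCommand is a hypothetical helper.

```javascript
// Read concern levels accepted by MongoDB 3.4 (later versions add others).
var READ_CONCERN_LEVELS = ["local", "majority", "linearizable"];

// Build the raw command equivalent to
// db.<collection>.find(filter).readConcern(level).maxTimeMS(maxTimeMS).
function buildFindCommand(collection, filter, level, maxTimeMS, onPrimary) {
  if (READ_CONCERN_LEVELS.indexOf(level) === -1) {
    throw new Error("unknown read concern level: " + level);
  }
  // "linearizable" reads can only be served by the primary.
  if (level === "linearizable" && !onPrimary) {
    throw new Error("linearizable read concern is only allowed on the primary");
  }
  return {
    find: collection,
    filter: filter,
    readConcern: { level: level },
    maxTimeMS: maxTimeMS
  };
}
```

In the shell, db.runCommand(buildFindCommand("emails", {}, "local", 10000, true)) returns the first batch of results in a cursor document. maxTimeMS matters most for linearizable reads, which can block if a majority of the members is unreachable.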

rs0:SECONDARY> db.emails.find()

Error: error: {

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk"

}

rs0:SECONDARY> rs.slaveOk()

rs0:SECONDARY> db.emails.findOne()

10) Allow reads from the secondaries with rs.slaveOk(). Note that this setting applies to the current shell connection; it does not change the replica set configuration.

11) Insert a new email into db.emails. Add a writeConcern to the request so that the insert is acknowledged by 2 nodes, with a 5-second timeout.
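Before writing the insert, it helps to check which numeric w values this replica set can honour: arbiters hold no data, so they never acknowledge writes. A minimal sketch working on the document returned by rs.conf() (only the members and arbiterOnly fields are used); the helpers are hypothetical and do not model "majority" or tag-based write concerns. Note also that when wtimeout expires, the write is reported with a write concern error but is not rolled back on the members that already applied it.

```javascript
// Count the members that hold data (arbiters do not), from an rs.conf() document.
function dataBearingMembers(conf) {
  return conf.members.filter(function (member) {
    return !member.arbiterOnly;
  }).length;
}

// A numeric w can only be satisfied if at least w data-bearing members exist.
// "majority" and tag sets are not modelled here and are reported as unchecked (null).
function canAcknowledge(conf, writeConcern) {
  if (typeof writeConcern.w !== "number") {
    return null;
  }
  return writeConcern.w <= dataBearingMembers(conf);
}
```

With the configuration of step 7 (four data-bearing members plus the arbiter), canAcknowledge(rs.conf(), { w: 2, wtimeout: 5000 }) is true, while w: 5 can never be satisfied.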

db.emails.insert(

{email:"test write concern", sender:"toto"},

{ writeConcern: { w: 2, wtimeout: 5000 } } );Sharding

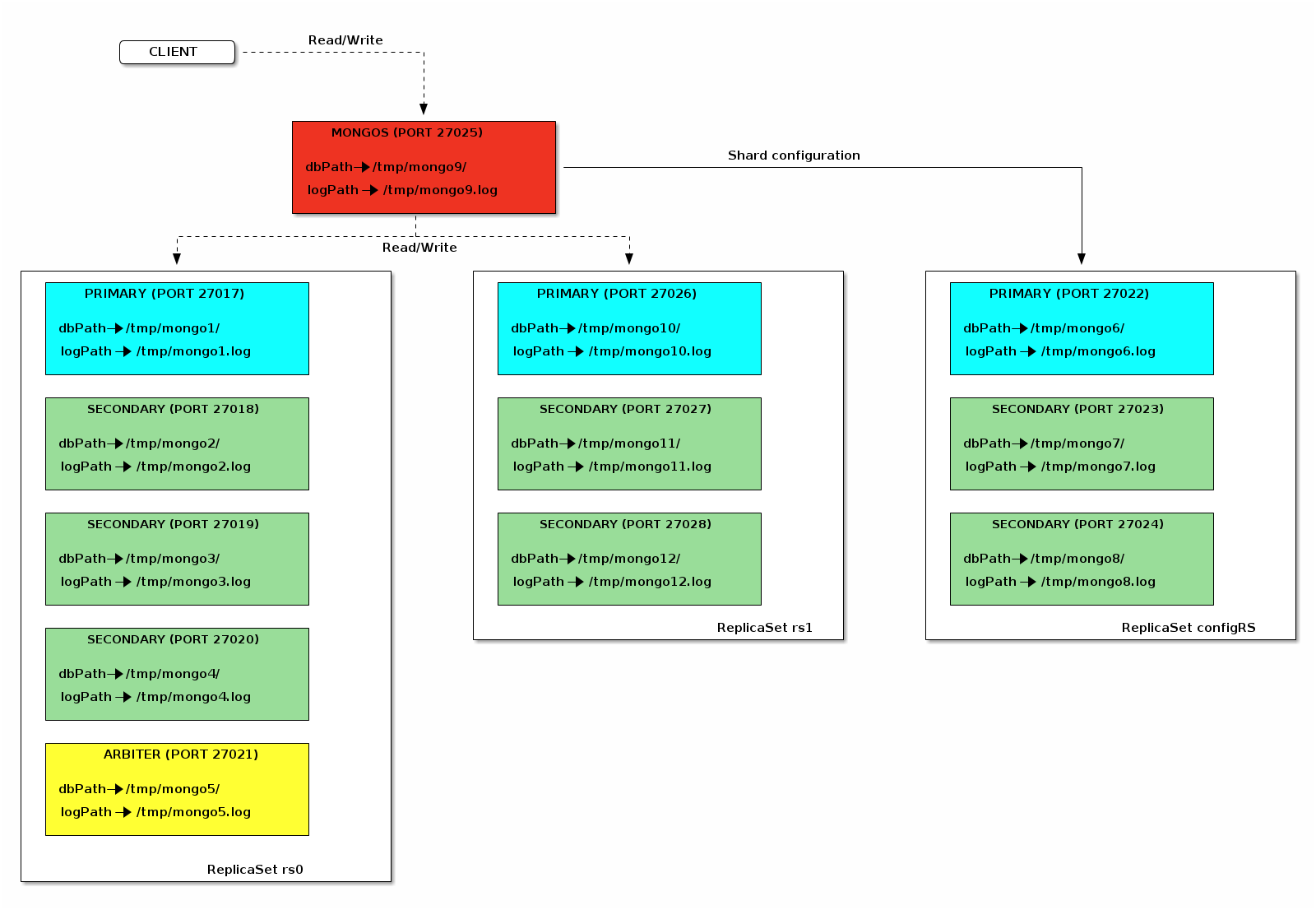

Nous allons rajouter du sharding dans notre clusteur. L’architecture cible est la suivante:

Nous allons commencer par reconfigurer la replica set rs0.

12) Redemarer les mongod secondaires en rajoutant l’option --shardsvr (! mettre les bon numero de pids qui correspond aux mongo2-mongo5)

[aar@wifibridge bin]$ grep -n "pid" /tmp/mongo*.log (1) /tmp/mongo1.log:1:2017-12-03T13:59:38.725+0100 I CONTROL [initandlisten] MongoDB starting : pid=30864 port=27017 dbpath=/tmp/mongo1 64-bit host=wifibridge.home /tmp/mongo2.log:1:2017-12-03T14:00:01.818+0100 I CONTROL [initandlisten] MongoDB starting : pid=31715 port=27018 dbpath=/tmp/mongo2 64-bit host=wifibridge.home /tmp/mongo3.log:1:2017-12-03T14:00:14.999+0100 I CONTROL [initandlisten] MongoDB starting : pid=32230 port=27019 dbpath=/tmp/mongo3 64-bit host=wifibridge.home /tmp/mongo4.log:1:2017-12-03T14:00:28.916+0100 I CONTROL [initandlisten] MongoDB starting : pid=326 port=27020 dbpath=/tmp/mongo4 64-bit host=wifibridge.home /tmp/mongo5.log:1:2017-12-03T15:57:23.650+0100 I CONTROL [initandlisten] MongoDB starting : pid=3617 port=27021 dbpath=/tmp/mongo5 64-bit host=wifibridge.home kill -9 31715 32230 326 3617 (2) ./mongod --port 27018 --replSet rs0 --fork --logpath /tmp/mongo2.log --dbpath /tmp/mongo2 --shardsvr (3) ./mongod --port 27019 --replSet rs0 --fork --logpath /tmp/mongo3.log --dbpath /tmp/mongo3 --shardsvr (4) ./mongod --port 27020 --replSet rs0 --fork --logpath /tmp/mongo4.log --dbpath /tmp/mongo4 --shardsvr (5) ./mongod --port 27021 --replSet rs0 --fork --logpath /tmp/mongo5.log --dbpath /tmp/mongo5 --shardsvr (6)
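Picking the right PIDs out of the grep output is error-prone. A minimal sketch that parses the "MongoDB starting : pid=... port=..." lines shown above and returns the PIDs of the requested ports; the helper names are hypothetical. Two related points about mongod itself: the --pidfilepath option writes the PID to a file, and a plain kill (SIGTERM) lets mongod shut down cleanly, which the MongoDB documentation recommends over kill -9.

```javascript
// Extract { pid, port } from a mongod startup log line such as
// "... MongoDB starting : pid=31715 port=27018 dbpath=/tmp/mongo2 ...".
function parseStartupLine(line) {
  var match = /MongoDB starting : pid=(\d+) port=(\d+)/.exec(line);
  if (!match) {
    return null;
  }
  return { pid: parseInt(match[1], 10), port: parseInt(match[2], 10) };
}

// Return the PIDs of the instances listening on the requested ports,
// in the order the lines appear.
function pidsForPorts(lines, ports) {
  return lines
    .map(parseStartupLine)
    .filter(function (info) {
      return info !== null && ports.indexOf(info.port) !== -1;
    })
    .map(function (info) {
      return info.pid;
    });
}
```

Fed with the five grep lines above and the ports [27018, 27019, 27020, 27021], it returns [31715, 32230, 326, 3617], the PIDs passed to kill.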

13) Tell the primary (27017) to hand over to one of the secondaries (rs.stepDown() in the mongo shell).

rs0:PRIMARY> rs.stepDown();

2017-12-03T22:34:06.059+0100 E QUERY [thread1] Error: error doing query: failed: network error while attempting to run command 'replSetStepDown' on host 'localhost:27017' :

DB.prototype.runCommand@src/mongo/shell/db.js:135:1

DB.prototype.adminCommand@src/mongo/shell/db.js:153:16

rs.stepDown@src/mongo/shell/utils.js:1182:12

@(shell):1:1

2017-12-03T22:34:06.060+0100 I NETWORK [thread1] trying reconnect to localhost:27017 (127.0.0.1) failed

2017-12-03T22:34:06.061+0100 I NETWORK [thread1] reconnect localhost:27017 (127.0.0.1) ok

rs0:SECONDARY>

The network error is expected: when it steps down, the former primary closes its client connections, so the shell reconnects and the prompt now shows SECONDARY.

14) Restart the former primary (the mongod that was listening on port 27017) with the additional --shardsvr option (use the correct PID!).

[aar@wifibridge bin]$ grep -n "pid" /tmp/mongo1.log

1:2017-12-03T22:22:10.524+0100 I CONTROL [initandlisten] MongoDB starting : pid=18012 port=27017 dbpath=/tmp/mongo1 64-bit host=wifibridge.home

[aar@wifibridge bin]$ kill -9 18012

./mongod --port 27017 --replSet rs0 --fork --logpath /tmp/mongo1.log --dbpath /tmp/mongo1 --shardsvr

Deploying a replica set for the config servers

15) Deploy a new replica set (configRs) with 3 config server mongod instances on ports 27022, 27023 and 27024.
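Both mongos --configdb (step 17) and sh.addShard() (steps 18 and 19) take a replica set seed list of the form <replSetName>/<host1>,<host2>,... A minimal sketch that builds such a string; seedList is a hypothetical helper and assumes all members run on the same host.

```javascript
// Build a "<replSetName>/<host>:<port>,..." seed list, the format used by
// mongos --configdb and by sh.addShard() for replica set shards.
// Assumption: every member runs on the same host (localhost by default).
function seedList(setName, ports, host) {
  var hostname = host || "localhost";
  var members = ports.map(function (port) {
    return hostname + ":" + port;
  });
  return setName + "/" + members.join(",");
}
```

seedList("configRs", [27022, 27023, 27024]) yields the value passed to --configdb, and seedList("rs1", [27026, 27027, 27028]) the argument of the second sh.addShard(). The list is only a seed: the rest of the set's membership is discovered from the members that answer.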

mkdir /tmp/mongo6/ ; ./mongod --port 27022 --replSet configRs --fork --logpath /tmp/mongo6.log --dbpath /tmp/mongo6 --configsvr

mkdir /tmp/mongo7/ ; ./mongod --port 27023 --replSet configRs --fork --logpath /tmp/mongo7.log --dbpath /tmp/mongo7 --configsvr

mkdir /tmp/mongo8/ ; ./mongod --port 27024 --replSet configRs --fork --logpath /tmp/mongo8.log --dbpath /tmp/mongo8 --configsvr

16) Connect to the instance on port 27022 and initialize the configRs replica set.

mongo localhost:27022

rs.initiate( {

_id: "configRs",

configsvr: true,

members: [

{ _id: 0, host: "localhost:27022" },

{ _id: 1, host: "localhost:27023" },

{ _id: 2, host: "localhost:27024" }

]

} )

17) Start a mongos instance (the query router) that uses the configRs replica set as its config servers:

mkdir /tmp/mongo9/ ; ./mongos --port 27025 --fork --logpath /tmp/mongo9.log --configdb configRs/localhost:27022,localhost:27023,localhost:27024

18) Connect a mongo shell to the mongos:

mongo localhost:27025

[aar@wifibridge bin]$ mongo localhost:27025

MongoDB shell version: 3.2.12

connecting to: localhost:27025/test

Server has startup warnings:

2017-12-03T22:42:31.172+0100 I CONTROL [main]

2017-12-03T22:42:31.172+0100 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2017-12-03T22:42:31.172+0100 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2017-12-03T22:42:31.172+0100 I CONTROL [main]

mongos> sh.addShard( "rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020,localhost:27021");

{ "shardAdded" : "rs0", "ok" : 1 }

19) Start 3 more mongod instances in a second replica set (rs1) and add it as a new shard:

mkdir /tmp/mongo10; ./mongod --port 27026 --replSet rs1 --fork --logpath /tmp/mongo10.log --dbpath /tmp/mongo10 --shardsvr

mkdir /tmp/mongo11; ./mongod --port 27027 --replSet rs1 --fork --logpath /tmp/mongo11.log --dbpath /tmp/mongo11 --shardsvr

mkdir /tmp/mongo12; ./mongod --port 27028 --replSet rs1 --fork --logpath /tmp/mongo12.log --dbpath /tmp/mongo12 --shardsvr

mongo localhost:27026

rs.initiate( {

_id : "rs1",

members: [ { _id : 0, host : "localhost:27026" },

{ _id : 1, host : "localhost:27027" },

{ _id : 2, host : "localhost:27028" }

]

})

mongo localhost:27025/admin

sh.addShard( "rs1/localhost:27026,localhost:27027,localhost:27028" )

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5a24e7ae0500bf4227fbec5c")

}

shards:

{ "_id" : "rs0", "host" : "rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020", "state" : 1 }

{ "_id" : "rs1", "host" : "rs1/localhost:27026,localhost:27027,localhost:27028", "state" : 1 }

active mongoses:

"3.4.10" : 1

balancer:

Currently enabled: yes

Currently running: yes

Balancer lock taken at Mon Dec 04 2017 07:14:10 GMT+0100 (CET) by ConfigServer:Balancer

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "test", "primary" : "rs0", "partitioned" : false }

Sharding a collection

20) In the shell connected to the mongos, enable sharding on the test database.

mongos> sh.enableSharding( "test" )

{ "ok" : 1 }

21) Shard the emails collection on the sender attribute.

mongos> use test;

switched to db test

mongos> db.emails.createIndex({sender:"hashed"});

{

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

mongos> sh.shardCollection("test.emails",{sender:"hashed"});

{ "collectionsharded" : "test.emails", "ok" : 1 }

22) Check that the collection is sharded using db.stats() and db.printShardingStatus(). Explain what you see.

mongos> use test;

switched to db test

mongos> db.stats()

mongos> db.emails.getShardDistribution()

Shard rs0 at rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020

data : 14.86MiB docs : 5932 chunks : 1

estimated data per chunk : 14.86MiB

estimated docs per chunk : 5932

Totals

data : 14.86MiB docs : 5932 chunks : 1

Shard rs0 contains 100% data, 100% docs in cluster, avg obj size on shard : 2KiB

// Only one chunk: the collection's data size is below the chunk size (chunkSize = 64MB), so it has not been split.

23) We will now generate a somewhat larger collection and watch how it is balanced across the shards.

| In total, the 8 mongo instances will need at least 16GB of RAM! |

mongos> use test

switched to db test

mongos> var bulk = db.big_collection.initializeUnorderedBulkOp();

mongos> people = ["Marc", "Bill", "George", "Eliot", "Matt", "Trey", "Tracy", "Greg", "Steve", "Kristina", "Katie", "Jeff"];

[

"Marc",

"Bill",

"George",

"Eliot",

"Matt",

"Trey",

"Tracy",

"Greg",

"Steve",

"Kristina",

"Katie",

"Jeff"

]

mongos> for(var i=0; i<2000000; i++){

... user_id = i;

... name = people[Math.floor(Math.random()*people.length)];

... number = Math.floor(Math.random()*10001);

... bulk.insert( { "user_id":user_id, "name":name, "number":number });

... }

mongos> bulk.execute();

BulkWriteResult({

"writeErrors" : [ ],

"writeConcernErrors" : [ ],

"nInserted" : 2000000,

"nUpserted" : 0,

"nMatched" : 0,

"nModified" : 0,

"nRemoved" : 0,

"upserted" : [ ]

})

mongos> db.big_collection.createIndex( { number : 1 } )

{

"raw" : {

"rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020" : {

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1,

"$gleStats" : {

"lastOpTime" : {

"ts" : Timestamp(1512389214, 1),

"t" : NumberLong(6)

},

"electionId" : ObjectId("7fffffff0000000000000006")

}

}

},

"ok" : 1

}

mongos> sh.shardCollection("test.big_collection",{"number":1});

{ "collectionsharded" : "test.big_collection", "ok" : 1 }
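With the ranged shard key { number: 1 }, each chunk owns a contiguous interval [min, max) of number values, and mongos sends each document to the shard that holds the chunk containing its key. A toy model of that lookup as a binary search; the chunk boundaries below are hypothetical (the real ones are listed by sh.status() or stored in the config.chunks collection), and -Infinity / Infinity stand in for MinKey / MaxKey.

```javascript
// Hypothetical chunk table for test.big_collection, sorted by min.
// Each chunk owns the half-open interval [min, max) of the shard key "number".
var chunks = [
  { min: -Infinity, max: 2000, shard: "rs0" },
  { min: 2000, max: 5000, shard: "rs0" },
  { min: 5000, max: 8000, shard: "rs1" },
  { min: 8000, max: Infinity, shard: "rs1" }
];

// Binary search for the chunk whose interval contains key; returns its shard.
function shardFor(chunkTable, key) {
  var lo = 0;
  var hi = chunkTable.length - 1;
  while (lo <= hi) {
    var mid = Math.floor((lo + hi) / 2);
    if (key < chunkTable[mid].min) {
      hi = mid - 1;
    } else if (key >= chunkTable[mid].max) {
      lo = mid + 1;
    } else {
      return chunkTable[mid].shard;
    }
  }
  return null;
}
```

Since number takes values from 0 to 10000, a query such as db.big_collection.find({ number: { $lt: 100 } }) can be routed only to the shards whose chunks overlap that range, whereas a query on user_id, which is not part of the shard key, has to be sent to every shard. With the hashed key on sender used for emails, the opposite trade-off applies: documents are spread evenly, but range queries on sender are broadcast.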

mongos> db.big_collection.getShardDistribution();

Shard rs0 at rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020

data : 122.41MiB docs : 1812256 chunks : 5

estimated data per chunk : 24.48MiB

estimated docs per chunk : 362451

Shard rs1 at rs1/localhost:27026,localhost:27027,localhost:27028

data : 32.36MiB docs : 479181 chunks : 2

estimated data per chunk : 16.18MiB

estimated docs per chunk : 239590

Totals

data : 154.78MiB docs : 2291437 chunks : 7

Shard rs0 contains 79.08% data, 79.08% docs in cluster, avg obj size on shard : 70B

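Shard rs1 contains 20.91% data, 20.91% docs in cluster, avg obj size on shard : 70B

The total (2291437 documents) is higher than the 2000000 inserted documents: during a chunk migration, and until the source shard deletes them, the documents of the migrated chunk exist on both shards. Running the same command again a little later:

mongos> db.big_collection.getShardDistribution();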

Shard rs0 at rs0/localhost:27017,localhost:27018,localhost:27019,localhost:27020

data : 85.85MiB docs : 1270900 chunks : 4

estimated data per chunk : 21.46MiB

estimated docs per chunk : 317725

Shard rs1 at rs1/localhost:27026,localhost:27027,localhost:27028

data : 49.25MiB docs : 729100 chunks : 3

estimated data per chunk : 16.41MiB

estimated docs per chunk : 243033

Totals

data : 135.1MiB docs : 2000000 chunks : 7

Shard rs0 contains 63.54% data, 63.54% docs in cluster, avg obj size on shard : 70B

Shard rs1 contains 36.45% data, 36.45% docs in cluster, avg obj size on shard : 70B
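The second distribution (4 chunks on rs0, 3 on rs1) is stable even though rs0 still holds 63.54% of the data, because in MongoDB 3.4 the balancer compares chunk counts, not data sizes: it migrates chunks when the difference between the shard with the most chunks and the shard with the fewest reaches a migration threshold (2 below 20 chunks, 4 from 20 to 79, 8 from 80 up). A minimal sketch of that rule; the helper names are hypothetical.

```javascript
// Migration threshold documented for MongoDB 3.4, based on the total
// number of chunks of the collection.
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) {
    return 2;
  }
  if (totalChunks < 80) {
    return 4;
  }
  return 8;
}

// chunksPerShard maps a shard name to its chunk count for one collection.
function needsBalancing(chunksPerShard) {
  var counts = Object.keys(chunksPerShard).map(function (shard) {
    return chunksPerShard[shard];
  });
  var total = counts.reduce(function (sum, n) { return sum + n; }, 0);
  var spread = Math.max.apply(null, counts) - Math.min.apply(null, counts);
  return spread >= migrationThreshold(total);
}
```

needsBalancing({ rs0: 5, rs1: 2 }) is true (spread 3, threshold 2), which matches the migrations observed between the two getShardDistribution() calls, while needsBalancing({ rs0: 4, rs1: 3 }) is false.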